Archive

How Azure Stack HCI is forcing changes in your datacenter – free webinar

This FREE Altaro webinar will cover the key developments and how they will impact datacenter management. Microsoft MVP Andy Syrewicze will be joined by fellow MVP and Azure Stack HCI expert Carsten Rachfahl to break down Azure Stack HCI and demo it live.

Andy and Carsten will help you prepare for these major developments, and if you have any questions about hybrid cloud or Azure Stack HCI, you can get them answered by these experts during the webinar Q&A.

Learn more and register: How Azure Stack HCI is forcing changes in your datacenter

Don’t miss out on this comprehensive lesson on how to navigate and integrate Azure Stack HCI into your datacenter management.

Save your seat for March 23rd!

Azure Resource Visualiser: Visualising Resources in the Azure Portal

I have been working on a series on Architecture Templates for Azure to streamline cloud adoption and the migration of workloads to Azure. These templates, which I call Resource Zones, are pre-defined architectures that take into account the workloads and the Azure services enabled for a particular customer.

Each Resource Zone essentially represents the common elements (such as app, db and storage) that share network connectivity, monitoring and IAM across instances of these archetypes, and that must be in place to ensure applications have access to the shared components when deployed.

Coincidence or not, I recently noticed the Azure Resource Visualiser in the Azure Portal. How cool is this new feature! It lets you visualise the resources deployed in an Azure Resource Group and identify their dependencies. You can select which resources to show and save the image.

Our new SIEM tool: Microsoft Azure Sentinel, intelligent security analytics for your entire enterprise

As we know, many legitimate threats go unnoticed. With an unsurprisingly high volume of alerts, and your team spending far too much time on infrastructure setup or BAU tasks, you need a solution that empowers your existing SecOps team to see threats more clearly and eliminate distractions.

That’s why we reimagined the SIEM tool as a new cloud-native solution called Microsoft Azure Sentinel. Azure Sentinel provides intelligent security analytics at cloud scale for your entire enterprise. Azure Sentinel makes it easy to collect security data across your entire hybrid organization from devices, to users, to apps, to servers on any cloud.

Collect data across your enterprise easily – With Azure Sentinel you can aggregate all security data with built-in connectors, native integration of Microsoft signals, and support for industry standard log formats like common event format and syslog.

Analyze and detect threats quickly with AI on your side – Security analysts face a huge burden from triaging as they sift through a sea of alerts, and correlate alerts from different products manually or using a traditional correlation engine.

Investigate and hunt for suspicious activities – Graphical and AI-based investigation will reduce the time it takes to understand the full scope of an attack and its impact. You can visualize the attack and take quick actions in the same dashboard.

Automate common tasks and threat response – While AI sharpens your focus on finding problems, once you have solved the problem you don’t want to keep finding the same problems over and over – rather you want to automate response to these issues.
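
To make the "industry standard log formats" point a bit more concrete, here is a minimal Python sketch of what a Common Event Format (CEF) record looks like and how its pipe-delimited header could be split. The sample event and the `parse_cef` function are made up for illustration; this is not how the Azure Sentinel connectors are implemented, just a way to see the shape of the data they ingest.

```python
import re

# CEF header field names, in the order they appear after the "CEF:" prefix.
HEADER_FIELDS = [
    "cef_version", "device_vendor", "device_product", "device_version",
    "event_class_id", "name", "severity",
]


def parse_cef(line: str) -> dict:
    """Split a CEF record into its 7 header fields plus extension pairs."""
    start = line.find("CEF:")
    if start == -1:
        raise ValueError("not a CEF record")
    # Split on unescaped pipes only; an 8th part, if present, is the extension.
    parts = re.split(r"(?<!\\)\|", line[start + 4:], maxsplit=7)
    if len(parts) < 7:
        raise ValueError("incomplete CEF header")
    event = dict(zip(HEADER_FIELDS, parts[:7]))
    extension = parts[7] if len(parts) > 7 else ""
    # The extension is a run of key=value pairs; values may contain spaces.
    event["extension"] = dict(
        re.findall(r"(\w+)=(.*?)(?=\s+\w+=|$)", extension)
    )
    return event


# Hypothetical sample event, for illustration only.
sample = ("CEF:0|ExampleVendor|ExampleFirewall|1.0|100|"
          "Blocked connection|5|src=10.0.0.1 dst=10.0.0.2 spt=1232")
event = parse_cef(sample)
print(event["name"], event["severity"], event["extension"]["src"])
```

The sketch ignores escaped characters inside extension values, so treat it as a reading aid rather than a complete parser.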

More Information:

https://azure.microsoft.com/en-us/services/azure-sentinel/#documentation

Azure Sentinel preview is free

There will be no charges specific to Azure Sentinel during the preview. Pricing for Azure Sentinel will be announced in the future and a notice will be provided prior to the end of the preview. Should you choose to continue using Azure Sentinel after the notice period, you will be billed at the applicable rates.

Password-less VM – The importance of securing your IaaS Linux VM in the Public Cloud

When creating a VM in the Public Cloud, some would think that the Provider would be responsible for its security. Guess what, you are responsible for its security.

A common misunderstanding is assuming that a strong password will do the job of securing access to the VM. To prove that it is not good enough: yesterday I created a VM in Azure for some Containers lab work and, in less than 9 hours, I had 8712 failed login attempts, as shown in the picture above.
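
If you want to get a similar number for your own VM, one way is to count the "Failed password" messages that sshd writes to the authentication log. The Python sketch below is only an illustration: the sample lines are made up, and the log location is an assumption (typically /var/log/auth.log on Debian/Ubuntu and /var/log/secure on RHEL-based images).

```python
import re
from collections import Counter

# Matches the message sshd typically logs for a rejected password, e.g.
# "Failed password for invalid user admin from 203.0.113.7 port 53422 ssh2"
FAILED_RE = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) "
    r"from (?P<ip>\S+) port \d+"
)


def summarize_failed_logins(lines):
    """Return (total, attempts per source IP, attempts per username)."""
    by_ip, by_user = Counter(), Counter()
    for line in lines:
        match = FAILED_RE.search(line)
        if match:
            by_ip[match["ip"]] += 1
            by_user[match["user"]] += 1
    return sum(by_ip.values()), by_ip, by_user


# Made-up sample lines (documentation IP ranges), for illustration only.
sample_log = [
    "Mar  3 10:01:12 lab sshd[1201]: Failed password for invalid user admin from 203.0.113.7 port 53422 ssh2",
    "Mar  3 10:01:15 lab sshd[1203]: Failed password for root from 203.0.113.7 port 53430 ssh2",
    "Mar  3 10:02:40 lab sshd[1210]: Failed password for invalid user test from 198.51.100.23 port 40112 ssh2",
    "Mar  3 10:03:02 lab sshd[1215]: Accepted publickey for labuser from 192.0.2.10 port 50022 ssh2",
]
total, by_ip, by_user = summarize_failed_logins(sample_log)
print(total, by_ip.most_common(1))
```

To run it against a real log, pass an open file object instead of `sample_log`, for example `summarize_failed_logins(open("/var/log/auth.log"))`.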

Creating a more Secure VM

So, what should you do to protect access to your public VM?

“Password-less VM”: Using an SSH public key instead of a password will greatly increase the difficulty of a brute-force guessing attack.

A “password-less” VM includes:

- A username that is not standard, such as “root” or “admin”: Azure already helps you with that by not allowing you to create “root” or “admin” as a username. Also note that in Linux, the username is case sensitive.

- No password for the user; no password-based login permitted. Instead, configure Private key/certificate SSH authentication: That’s a must!

- A randomized public SSH port.

Verifying

Using the Linux command below, you should see a line showing: PasswordAuthentication no

$ sudo tail -n 10 /etc/ssh/sshd_config
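
Looking at the last lines of the file works when the setting happens to sit there. As a slightly more robust check, here is a minimal Python sketch that reads the configuration text and reports the global PasswordAuthentication value. It relies on documented sshd behaviour (the first value found for a keyword is used, keywords are case-insensitive, the default is "yes"), but it is a simplification: the function name is mine, it does not follow Include directives, and it stops at the first Match block.

```python
def password_auth_enabled(config_text: str) -> bool:
    """Return the effective global PasswordAuthentication setting.

    sshd uses the first value it finds for a keyword, keywords are
    case-insensitive, and the default is "yes" when the keyword is absent.
    Match blocks are not evaluated: parsing stops at the first one.
    """
    for raw in config_text.splitlines():
        # Simplification: treat everything after '#' as a comment.
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        parts = line.split()
        keyword = parts[0].lower()
        if keyword == "match":
            break  # conditional blocks are out of scope for this sketch
        if keyword == "passwordauthentication" and len(parts) > 1:
            return parts[1].lower() == "yes"
    return True  # OpenSSH default when the keyword is not set


# Example content only, not a complete sshd_config.
sample = """\
# Example only
Port 22
PermitRootLogin no
PasswordAuthentication no
PubkeyAuthentication yes
"""
print(password_auth_enabled(sample))
```

On the VM itself you could feed it the real file with `password_auth_enabled(open("/etc/ssh/sshd_config").read())`. Running `sudo sshd -T` is another option, since it prints the effective configuration as sshd sees it.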

Detailed Steps

Check out the Quick steps: Create and use an SSH public-private key pair for Linux VMs in Azure article on how to configure those steps.

What else should you do?

Azure offers great protection options for Azure VMs. It’s definitely a must that you:

- Install Microsoft Monitoring Agent to enable Azure Security Center which will help you prevent, detect, and respond to threats. Security Center analyzes the security state of your Azure resources. When Security Center identifies potential security vulnerabilities, it creates recommendations. The recommendations guide you through the process of configuring the needed controls. For detailed information see https://docs.microsoft.com/en-us/azure/security-center/quick-onboard-linux-computer

- Configure Security Policies, which drive the security recommendations you get in Azure Security Center.

- Configure Just-in-time (JIT) virtual machine (VM) access which can be used to lock down inbound traffic to your Azure VMs, reducing exposure to attacks while providing easy access to connect to VMs when needed. Configure custom ports and customize the following settings:

Protocol type – The protocol that is allowed on this port when a request is approved.

Allowed source IP addresses – The IP ranges that are allowed on this port when a request is approved.

Maximum request time – The maximum time window during which a specific port can be opened.

- Configure Network security groups and rules to control traffic to virtual machines

- Configure dedicated network connection between your on-premises network and your Azure vNets, either through a VPN or through Azure ExpressRoute: Production services should not be exposing SSH to the internet.
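
To show how the three JIT settings listed above (protocol type, allowed source IP addresses, maximum request time) work together, here is a small Python sketch that models a single port rule and checks an access request against it. `JitPortRule` and `request_allowed` are hypothetical names of my own and not part of any Azure SDK; the real evaluation happens inside Azure Security Center.

```python
from dataclasses import dataclass
from datetime import timedelta
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class JitPortRule:
    """Hypothetical model of one JIT port rule (not an Azure SDK type)."""
    port: int
    protocol: str                 # "TCP", "UDP" or "*" for any
    allowed_sources: tuple        # CIDR ranges, e.g. ("203.0.113.0/24",)
    max_request_time: timedelta   # longest window a request may ask for


def request_allowed(rule, port, protocol, source_ip, duration):
    """Check one access request against the three settings of a rule."""
    if port != rule.port:
        return False
    if rule.protocol != "*" and protocol.upper() != rule.protocol.upper():
        return False
    if duration <= timedelta(0) or duration > rule.max_request_time:
        return False
    return any(ip_address(source_ip) in ip_network(cidr)
               for cidr in rule.allowed_sources)


ssh_rule = JitPortRule(
    port=22,
    protocol="TCP",
    allowed_sources=("203.0.113.0/24",),   # documentation range, made up
    max_request_time=timedelta(hours=3),
)
# Allowed source, within the time limit.
print(request_allowed(ssh_rule, 22, "tcp", "203.0.113.40", timedelta(hours=1)))
# Source outside the allowed range.
print(request_allowed(ssh_rule, 22, "tcp", "198.51.100.9", timedelta(hours=1)))
# Requested window longer than the maximum request time.
print(request_allowed(ssh_rule, 22, "tcp", "203.0.113.40", timedelta(hours=8)))
```

The point of the model is that a request has to pass all three checks; outside an approved window the port stays closed.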

Stay safe!

Article also published on my LinkedIn page: https://www.linkedin.com/pulse/password-less-vm-importance-securing-your-iaas-linux-cardoso/?published=t

The transition to adopting cloud services is unique for every organization. What does yours look like?

- Andy Syrewicze (Microsoft MVP and Technical Evangelist – Altaro),

- Didier Van Hoye (Microsoft MVP and Infrastructure Architect – FGIA),

- Thomas Maurer (Microsoft MVP and Cloud Architect – itnetX)

There are limited seats, REGISTER NOW to save your spot

This FREE LIVE webinar will focus on cloud technologies and will be presented as a panel-style discussion on the possibilities of cloud technologies coming out of Microsoft, including:

- Windows Server 2019 and the Software-Defined Datacenter

- New Management Experiences for Infrastructure with Windows Admin Center

- Hosting an Enterprise Grade Cloud in your datacenter with Azure Stack

- Taking your first steps into the public cloud with Azure IaaS

After watching the experts discuss the details, you’ll see that the cloud doesn’t have to be an all or nothing discussion. This webinar will prepare you for your journey by revealing the available options and how to make the most out of them!

It is a great opportunity to ask industry experts as they share their experiences working with many customers worldwide.

WHEN:

Wednesday June 13th 2018 – Presented live twice on the day

- Session 1: 2pm CEST – 5am PDT – 8am EDT

- Session 2: 6pm CEST – 9am PDT – 12pm EDT

- Twitter: https://goo.gl/f8v9mH

- Facebook: https://goo.gl/wDe7vN

- LinkedIn: https://goo.gl/go5JpL

Remove yourself as guest user of a partner organisation AD tenant

In the past, when working with partner organisations where you were invited to access shared resources or applications, in order to get your access removed/revoked you would need to contact their Global Admin and ask them to remove you. That was not an easy task!

Now, Microsoft has released an Azure B2B update which allows you to remove yourself from the partner organisation’s AD tenant.

When a user leaves an organization, the user account is “soft deleted” in the directory.

By default, the user object moves to the Deleted users area in Azure AD but is not permanently deleted for 30 days. This soft deletion enables the administrator to restore the user account (including groups and permissions), if the user makes a request to restore the account within the 30-day period.

If you permanently delete a user, this action is irrevocable.
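
As a quick illustration of the 30-day soft-delete window, the Python sketch below works out until when a deleted user could still be restored. The dates and function names are made up, and the exact boundary behaviour (whether the last moment of day 30 still counts) is an assumption on my part.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=30)   # soft-delete window described above


def restore_deadline(deleted_at: datetime) -> datetime:
    """Moment after which the user object is permanently deleted."""
    return deleted_at + RETENTION


def can_restore(deleted_at: datetime, now: datetime = None) -> bool:
    """True while the deleted user is still inside the 30-day window."""
    now = now or datetime.now(timezone.utc)
    return now < restore_deadline(deleted_at)


# Made-up deletion date, for illustration only.
deleted = datetime(2018, 5, 20, 9, 0, tzinfo=timezone.utc)
print(restore_deadline(deleted).date())
print(can_restore(deleted, now=datetime(2018, 6, 10, tzinfo=timezone.utc)))
print(can_restore(deleted, now=datetime(2018, 6, 25, tzinfo=timezone.utc)))
```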

These highly-requested capabilities simplify and modernize your collaboration. They also empower your partner users and help you with your GDPR obligations.

You can find more information about this and other exciting new features of Azure B2B at: https://docs.microsoft.com/en-au/azure/active-directory/active-directory-b2b-leave-the-organization

If you’re interested in viewing or deleting personal data, please see the Azure Data Subject Requests for the GDPR article. If you’re looking for general info about GDPR, see the GDPR section of the Service Trust portal.

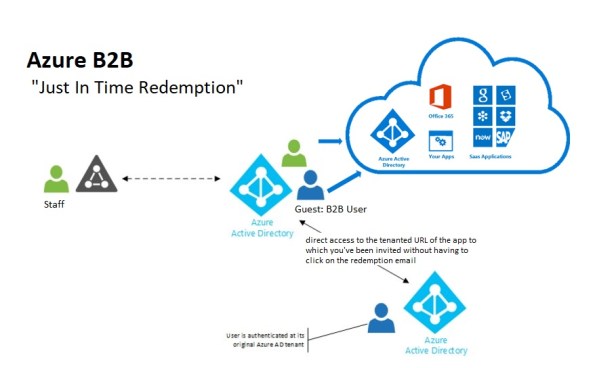

New Azure B2B Invite process.

New Azure B2B Invite process: Redemption through a direct link

“Just in Time Redemption”.

In the past, for your guest/partner users to access shared resources using Azure B2B Collaboration, they had to be invited by email to access resources/apps on your Azure tenant. On receiving the email, your guest/partner clicked the invitation link, which triggered its acceptance, added the guest/partner account as a guest user in your tenant, and provided access to the resources or apps you had configured.

Now, although that option is still available, your guest/partner users can simply access the application you’ve invited them to. How? You can invite a guest/partner user by sending them a direct link to a shared app.

NEW Modernized Consent Experience. When a guest/partner user accesses your organization’s resources for the first time, they will interact with a brand new, simple, modernized consent experience.

Image source:https://cloudblogs.microsoft.com/enterprisemobility/2018/05/14/exciting-improvements-to-the-b2b-collaboration-experience/

After any guest user signs in to access resources in a partner organization for the first time, they see a Review permissions screen.

The guest/partner user must accept the use of their information in accordance with the inviting organization’s privacy policies to continue.

Upon consent, the guest/partner users will be redirected to the application shared by you.

How it works:

- You want your guest/partner user to access a specific application

- You add them as a guest user to your organization (In the Azure Portal, go to Azure Active Directory -> Users -> New Guest User)

- In the message invitation, type the link to the application you want them to have access to

- Now, your guest/partner user will only have to click on the link to the application to immediately access it after giving consent.

It’s very simple, isn’t it?

Do you have questions on Containers? #AskBenArmstrong

Fundamentally, Containers are an isolated, resource controlled, and portable runtime environment which runs on a host machine or virtual machine and allows you to run an application or process which is packaged with all the required dependencies and configuration files on its own.

When you containerize an application, only the components needed to run this application, and of course the application itself, are combined into an image, which is used to create the Containers.

How are you utilising containers? Do you have questions on Containers? On Tuesday, 24th April, Microsoft Program Manager Ben Armstrong will be answering your questions on Containers. It is a rare opportunity. Don’t miss out.

- Date and Time: Tuesday, Apr 24, 2018, 4pm CEST (7am PDT / 10am EDT) Duration: Approx. 1 hour

- Date and Time: Tuesday, Apr 24, 2018, 10am PDT / 1pm EDT (7pm CEST) Duration: Approx. 1 hour

You can also ask questions through Twitter until Tuesday by including #AskBenArmstrong.

Serial Console access for both #Linux and #Windows #Azure VMs #COM1 #SerialConsole

Source: https://azure.microsoft.com/en-us/blog/virtual-machine-serial-console-access/

Now you can debug, for example, an fstab error on a Linux VM with direct serial-based access and fix issues with little effort. It’s like having a keyboard plugged into the server in a Microsoft datacenter, but from the comfort of your office.

Serial Console for Virtual Machines is available in all global regions! The serial connection is to the COM1 serial port of the virtual machine, and it provides access to the virtual machine regardless of the virtual machine’s network or operating system state.

All data sent back and forth is encrypted on the wire. All access to the serial console is currently logged in the boot diagnostics logs of the virtual machine. Access to these logs is owned and controlled by the Azure virtual machine administrator.

You can access it by going to the Azure portal and visiting the Support + Troubleshooting section.

Security Access Requirements

Serial Console access requires you to have VM Contributor or higher privileges on the virtual machine. This ensures that connection to the console is kept at the highest level of privileges to protect your system. Make sure you are using role-based access control to limit access to only those administrators who should have it.

If your AAD tenant requires Multi-Factor Authentication, access to the serial console will also require MFA, because the console is accessed via the Azure portal.

How to enable it:

For Linux VMs: this capability requires no changes to existing Linux VMs and it will just start working.

For Windows VMs: it requires a few additional steps to enable it:

- Virtual machine MUST have boot diagnostics enabled

- The account using the serial console must have the Contributor role for the VM and the boot diagnostics storage account.

- Open the Azure portal

- In the left menu, select virtual machines.

- Click on the VM in the list. The overview page for the VM will open.

- Scroll down to the Support + Troubleshooting section and click on serial console (Preview) option. A new pane with the serial console will open and start the connection.

Note: For all platform images starting in March, Microsoft have already taken the required steps to enable the Special Administration Console (SAC) which is exposed via the Serial Console.

Key features of the new Microsoft Azure Site Recovery Deployment Planner

Azure Site Recovery Deployment Planner is now GA with support for both Hyper-V and VMware.

Disaster Recovery cost to Azure is now added in the report. It gives compute, storage, network and Azure Site Recovery license cost per VM.

ASR Deployment Planner does a deep, ASR-specific assessment of your on-premises environment. It provides recommendations that are required by Azure Site Recovery for successful DR operations such as replication, failover, and DR-Drill of your VMware or Hyper-V virtual machines.

Also, if you intend to migrate your on-premises workloads to Azure, use Azure Migrate for migration planning. Azure Migrate assesses on-premises workloads and provides guidance.

Key features of the tool are:

- Estimated network bandwidth required for initial replication (IR) and delta replication.

- Storage type (standard or premium storage) requirement for each VM.

- Total number of standard and premium storage accounts to be provisioned.

- For VMware, it provides the required number of Configuration Servers and Process Servers to be deployed on-premises.

- For Hyper-V, it provides additional storage requirements on-premises.

- For Hyper-V, the number of VMs that can be protected in parallel (in a batch) and protection order of each batch for successful initial replication.

- For VMware, the number of VMs that can be protected in parallel to complete initial replication in a given time.

- Throughput that ASR can get from on-premises to Azure.

- VM eligibility assessment based on number of disks, size of the disk and IOPS, OS type.

- Estimate DR cost for the target Azure region in the specific currency.
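
To get a feel for the kind of number behind the "estimated network bandwidth required for initial replication" item, here is a back-of-the-envelope calculation in Python. It is plain arithmetic (data volume divided by the replication window) and not the algorithm the Deployment Planner uses: the tool profiles your environment and considers factors such as data churn, which this sketch ignores. The function names and sample figures are made up.

```python
def required_mbps(data_gb: float, window_hours: float) -> float:
    """Average throughput (megabits/s) needed to move data_gb in window_hours.

    Uses decimal units: 1 GB = 1000 MB = 8000 megabits.
    """
    if window_hours <= 0:
        raise ValueError("window_hours must be positive")
    return data_gb * 8000 / (window_hours * 3600)


def hours_needed(data_gb: float, link_mbps: float) -> float:
    """Time (hours) to move data_gb over a link sustaining link_mbps."""
    if link_mbps <= 0:
        raise ValueError("link_mbps must be positive")
    return data_gb * 8000 / link_mbps / 3600


# Made-up example: 2000 GB of initial replication data.
print(round(required_mbps(2000, 48), 1))   # throughput for a 48-hour window
print(round(hours_needed(2000, 100), 1))   # duration on a 100 Mbps link
```

For the sample figures, the 48-hour window works out to roughly 93 Mbps sustained, and a 100 Mbps link would need a little over 44 hours. The report produced by the tool should be treated as the authoritative figure.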

When to use ASR Deployment Planner and Azure Migrate?

- DR from VMware/Hyper-V to Azure

- Migration from VMware to Azure

Download the tool and learn more about the VMware to Azure Deployment Planner and the Hyper-V to Azure Deployment Planner.