Archive

Hyper-V Windows Server 2012 improvements and comparison

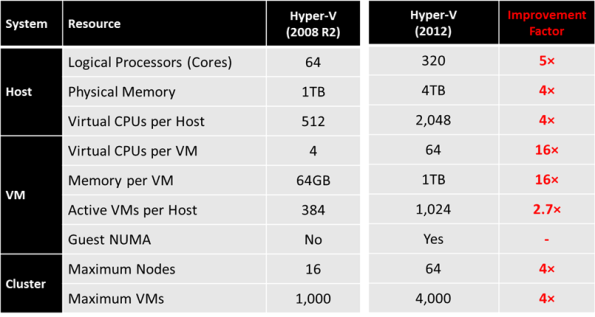

Significant improvements have been made across the board: Hyper-V now supports larger clusters, a significantly higher number of active virtual machines per host, and more advanced performance features such as in-guest Non-Uniform Memory Access (NUMA).

The table below shows the improvements Microsoft has made in Windows Server 2012 Hyper-V:

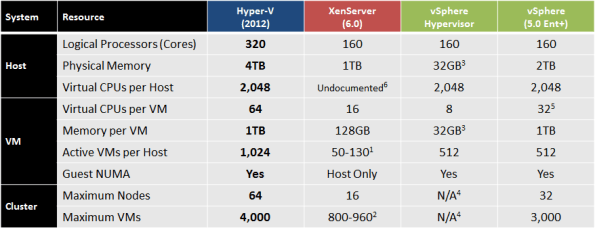

The table also shows that both Windows Server 2012 Hyper-V and vSphere 5.0 Enterprise Plus can deliver up to 1TB of memory to an individual virtual machine. However, one aspect to bear in mind when creating virtual machines of this size is the vRAM (Virtual Machine Memory) entitlement in vSphere 5.0:

- Each vSphere 5.0 Enterprise Plus CPU license comes with a vRAM entitlement of 96GB; on a 2-CPU physical host, this equates to 192GB of vRAM added to a ‘vRAM Pool’.

- The 1TB virtual machine would consume 96GB of the vRAM allocation (96GB is the upper limit counted against the pool for an individual VM, one of the results of customer feedback on the original vRAM licensing announcements).

- This would leave only 96GB of vRAM for all other virtual machines, restricting scale. The only way to overcome this would be to purchase additional vSphere 5.0 licenses at considerable expense, on top of the extra administrative overhead of monitoring and managing vRAM entitlements.
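The vRAM arithmetic above can be sketched in a few lines. This is a simplified model, assuming a single vRAM pool, that every VM counted is powered on, and the 96GB per-license entitlement and 96GB per-VM cap stated above; the function names are hypothetical.

```python
import math

ENTITLEMENT_PER_LICENSE_GB = 96  # vSphere 5.0 Enterprise Plus, per CPU license
PER_VM_CAP_GB = 96               # most vRAM charged for any single VM

def vram_charged_gb(configured_gb: int) -> int:
    """vRAM counted against the pool for one powered-on VM."""
    return min(configured_gb, PER_VM_CAP_GB)

def remaining_vram_gb(cpu_licenses: int, vm_sizes_gb: list[int]) -> int:
    """Pool size minus what the given VMs consume (negative means over the entitlement)."""
    pool = cpu_licenses * ENTITLEMENT_PER_LICENSE_GB
    return pool - sum(vram_charged_gb(s) for s in vm_sizes_gb)

def licenses_needed(physical_cpus: int, vm_sizes_gb: list[int]) -> int:
    """At least one license per physical CPU, plus enough entitlement to cover vRAM."""
    used = sum(vram_charged_gb(s) for s in vm_sizes_gb)
    return max(physical_cpus, math.ceil(used / ENTITLEMENT_PER_LICENSE_GB))

# 2-CPU host running a single 1TB (1024GB) VM
print(remaining_vram_gb(2, [1024]))           # 96
# Same host plus twenty 8GB VMs: 96 + 160 = 256GB charged
print(licenses_needed(2, [1024] + [8] * 20))  # 3
```

In other words, adding a modest number of ordinary VMs next to the 1TB VM already pushes the host past its two-license entitlement.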


VMM 2012 SP1 storage provider support: new SMP storage provider

With the release of VMM 2012 SP1 CTP2, Microsoft added support for the SMP storage provider.

VMM 2012 SP1 now supports the following types of storage providers:

- SMI-S CIM-XML

- SMP

Besides the new SMP provider type, the VMM 2012 SP1 release adds the following new functionality:

- Supports auto (dynamic) iSCSI target systems, such as the Dell EqualLogic PS Series. Before SP1, System Center 2012 – Virtual Machine Manager supported only static iSCSI target systems.

- Supports the thin provisioning of logical units through VMM. Your storage array must support thin provisioning, and your storage administrator must enable thin provisioning for the storage pool.

To evaluate the storage enhancements in this CTP, you must use the following storage arrays.

| Provider Type | Supported Arrays |
|---|---|
| SMI-S CIM-XML | See the “Supported Storage Arrays” section of Configuring Storage Overview for the supported storage arrays. |
| SMP | Dell EqualLogic PS Series using iSCSI |

Solutions for Private, Public, and Hybrid Clouds and Introducing Windows Server 2012: Free e-books

Introducing Windows Server 2012

If you are looking for an early, high-level view of Windows Server 2012, this guide introduces its new features and capabilities, with scenario-based insights demonstrating how the platform can meet the needs of your business. Click here to download.

Solutions for Private, Public, and Hybrid Clouds

Make sure to read chapter one for information on why cloud computing is the most efficient and cost-effective way to deliver computing resources to users according to your business needs:

Chapter 1: Microsoft Solutions for Private, Public and Hybrid Clouds. Click here.

Chapter 2: From Virtualization to the Private Cloud. Click here.

Chapter 3: Public and Hybrid Clouds. Click here.

SYSRET 64-bit OS privilege escalation vulnerability on Intel: does NOT affect Hyper-V

Last week US-CERT warned of a guest-to-host VM escape vulnerability: an issue on Intel-based servers could lead to a “break out” from a VM to the host in certain virtualisation products, including Microsoft’s. From the advisory: “A ring3 attacker may be able to specifically craft a stack frame to be executed by ring0 (kernel) after a general protection exception (#GP). The fault will be handled before the stack switch, which means the exception handler will be run at ring0 with an attacker’s chosen RSP causing a privilege escalation”: http://www.kb.cert.org/vuls/id/649219
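Some background that is not part of the advisory text quoted above: the #GP is raised when the kernel returns to user mode with SYSRET and the return address (RIP) is non-canonical. On Intel CPUs that fault is taken while still in ring 0, but after the kernel has already restored the user-controlled RSP, so the handler runs on the attacker’s stack. The canonical-address test can be sketched as below, assuming 48-bit virtual addresses; the function names are hypothetical and this is an illustration, not kernel code.

```python
def is_canonical(addr: int, va_bits: int = 48) -> bool:
    """True if bits 63..(va_bits-1) are all copies of bit (va_bits-1)."""
    addr &= (1 << 64) - 1               # treat as an unsigned 64-bit value
    upper = addr >> (va_bits - 1)       # bits 63..47 for 48-bit addressing
    return upper == 0 or upper == (1 << (65 - va_bits)) - 1

def sysret_faults_in_ring0_on_intel(return_rip: int) -> bool:
    """Simplified: on Intel, SYSRET to a non-canonical RIP raises #GP while still in ring 0."""
    return not is_canonical(return_rip)

print(is_canonical(0x00007FFFFFFFFFFF))  # True  (top of the lower half)
print(is_canonical(0x0000800000000000))  # False (first non-canonical address)
print(is_canonical(0xFFFF800000000000))  # True  (start of the upper half)
```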

Affected vendors include Intel Corp., FreeBSD, Microsoft, NetBSD, Oracle, Red Hat, SUSE Linux and Xen.

However, Hyper-V is NOT affected by the VU#649219 VM “break out”.

I asked the Microsoft Hyper-V product team in Redmond whether Hyper-V was actually affected. Their answer:

• The problem does affect 64-bit OSes on Intel hardware, but Hyper-V is not affected.

• This problem will not lead to break outs from Hyper-V VMs.

• Windows 8 is not affected.

• Windows Server 2012 is not affected.

Aidan Finn has also covered this: http://www.aidanfinn.com/?p=12838

Hyper-V Windows 2012: Enhanced Storage Capabilities. How does VMware compare?

Windows Server 2012 Hyper-V introduces a number of enhanced storage capabilities to support the most intensive, mission-critical workloads:

• Virtual Fibre Channel. Enables virtual machines to integrate directly into Fibre Channel Storage Area Networks (SANs), unlocking scenarios such as Fibre Channel-based Hyper-V guest clusters.

• Support for 4-KB Disk Sectors in Hyper-V Virtual Disks. Support for 4,096-byte (4-KB) disk sectors lets customers take advantage of emerging storage hardware that provides increased capacity and reliability.

• New Virtual Hard Disk Format. This new format, called VHDX, is designed to better handle current and future workloads and addresses the technological demands of an enterprise’s evolving needs by increasing storage capacity, protecting data, improving performance on 4-KB disks, and providing additional operation-enhancing features. The maximum size of a VHDX file is 64TB.

• Offloaded Data Transfer (ODX). With offloaded data transfer support, the Hyper-V host CPU can concentrate on the processing needs of the application while storage-related tasks are offloaded to the SAN, increasing performance.
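Why sector size matters for performance can be shown with a little arithmetic. On a drive with 4,096-byte physical sectors that emulates 512-byte sectors (often called 512e), a write that does not start and end on a 4-KB boundary forces the drive to read the partial sector, merge the new data, and write it back. The sketch below models only that alignment check; the function names are hypothetical and this is not how Hyper-V or VHDX implement their 4-KB handling.

```python
PHYSICAL_SECTOR = 4096  # 4-KB (4,096-byte) physical sector

def sectors_touched(offset: int, length: int, sector: int = PHYSICAL_SECTOR) -> int:
    """Number of physical sectors spanned by an I/O of `length` bytes at `offset`."""
    first = offset // sector
    last = (offset + length - 1) // sector
    return last - first + 1

def needs_read_modify_write(offset: int, length: int, sector: int = PHYSICAL_SECTOR) -> bool:
    """A write that does not start and end on a physical sector boundary
    forces a read-modify-write of the partially covered sector(s)."""
    return offset % sector != 0 or (offset + length) % sector != 0

# A 4KB write aligned to 512 bytes but not to 4,096 bytes
print(sectors_touched(512, 4096), needs_read_modify_write(512, 4096))    # 2 True
print(sectors_touched(8192, 4096), needs_read_modify_write(8192, 4096))  # 1 False
```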

Hyper-V provides a number of advantages over VMware in this area. Hyper-V lets you use Device Specific Modules (DSMs), produced by storage vendors, in conjunction with the Multipath I/O (MPIO) framework within Windows Server. This ensures that customers run their workloads on an optimized configuration from the start, as the storage vendor intended, providing the highest levels of performance and availability. (Note: Only the Enterprise and Enterprise Plus editions of vSphere 5.0 provide this capability, through a feature known as ‘vStorage APIs for Multipathing’, meaning customers have to upgrade to higher, more costly editions in order to unlock the best performance from their storage investments.)
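To illustrate the kind of decision a multipathing module makes on each I/O, here is a minimal round-robin path selector that skips failed paths. It is a conceptual sketch only, using two hypothetical paths `A` and `B`; it is not the Windows MPIO/DSM interface, and vendor DSMs implement their own, often more sophisticated, load-balancing policies.

```python
from dataclasses import dataclass

@dataclass
class Path:
    name: str
    healthy: bool = True

class RoundRobinSelector:
    """Rotate I/O across healthy paths; unhealthy paths are skipped (failover)."""

    def __init__(self, paths: list[Path]):
        if not paths:
            raise ValueError("at least one path is required")
        self.paths = paths
        self._next = 0

    def select(self) -> Path:
        n = len(self.paths)
        for i in range(n):
            idx = (self._next + i) % n
            if self.paths[idx].healthy:
                self._next = (idx + 1) % n  # start after this path next time
                return self.paths[idx]
        raise RuntimeError("no healthy path to the LUN")

a, b = Path("A"), Path("B")
selector = RoundRobinSelector([a, b])
print([selector.select().name for _ in range(3)])  # ['A', 'B', 'A']
b.healthy = False                                  # e.g. HBA or switch failure
print([selector.select().name for _ in range(2)])  # ['A', 'A']
```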

Also, by integrating Windows Server 2012 Hyper-V with an ODX-capable storage array, many storage-related tasks that would normally consume valuable CPU and network resources on the Hyper-V hosts are offloaded to the array itself, where they execute much faster, increasing performance significantly and freeing up resources on the hosts themselves.
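The difference between a normal copy and an offloaded one can be modeled as follows. With ODX, the host asks the array for a token that represents the source data (an offload read) and hands that token back along with the destination (an offload write), so the array moves the data internally. The class and method names below are hypothetical and token handling is greatly simplified; this is a toy model, not the Windows ODX API.

```python
import secrets

class StorageArray:
    """Toy array model; counts payload bytes crossing the host <-> array link."""

    def __init__(self):
        self.blocks: dict[tuple[str, int], bytes] = {}  # (LUN, offset) -> data
        self._tokens: dict[str, tuple[str, int]] = {}
        self.host_bytes = 0

    # Traditional path: data is read into the host and written back out.
    def read(self, key):
        data = self.blocks[key]
        self.host_bytes += len(data)
        return data

    def write(self, key, data):
        self.host_bytes += len(data)
        self.blocks[key] = data

    # Offloaded path: the host only handles a small opaque token.
    def offload_read(self, key) -> str:
        token = secrets.token_hex(16)
        self._tokens[token] = key
        return token

    def offload_write(self, token, dest):
        src = self._tokens.pop(token)
        self.blocks[dest] = self.blocks[src]  # copy happens inside the array

def host_copy(array, src, dst):
    array.write(dst, array.read(src))

def odx_copy(array, src, dst):
    array.offload_write(array.offload_read(src), dst)

for copy in (host_copy, odx_copy):
    arr = StorageArray()
    arr.blocks[("lun1", 0)] = b"x" * 1_048_576  # 1 MiB of data
    copy(arr, ("lun1", 0), ("lun2", 0))
    print(copy.__name__, arr.host_bytes)
# host_copy 2097152
# odx_copy 0
```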

How does VMware compare?

| Capability | Windows Server 2012 RC Hyper-V | VMware ESXi 5.0 | VMware vSphere 5.0 Enterprise Plus |
|---|---|---|---|
| Virtual Fibre Channel | Yes | Yes | Yes |
| 3rd Party Multipathing (MPIO) | Yes | No | Yes (VAMP) |
| Native 4-KB Disk Support | Yes | No | No |
| Maximum Virtual Disk Size | 64TB VHDX | 2TB VMDK | 2TB VMDK |
| Maximum Pass Through Disk Size | Varies | 64TB | 64TB |
| Offloaded Data Transfer | Yes | No | Yes (VAAI) |

System Center VMM 2012 SP1 CTP2: What’s new

Microsoft announced the availability of CTP2 of System Center 2012 SP1.

Support for Windows Server 2012 and SQL Server 2012 was introduced in CTP1. Here is what CTP2 adds:

· Network Virtualization: Introduced in CTP1; this release adds support for using DHCP to assign customer addresses, and for using either the IP rewrite or IP encapsulation mechanism to virtualize the IP address of a virtual machine.

· VHDX support: Introduced in CTP1; you can now also convert from .vhd to .vhdx. In addition, when you create a virtual machine with a blank virtual hard disk, placement determines the format of the virtual hard disk based on the OS of the destination host, and provisioning a physical computer as a Hyper-V host supports using a .vhdx file as the base operating system image.

· Storage Enhancements: Support for the new Windows Standards-Based Storage Management service, which enables you to discover storage by using multiple provider types. In addition, this release adds support for the thin provisioning of logical units, and for the discovery of Serial Attached SCSI (SAS) storage.

· Provisioning a Hyper-V Host Enhancements: Support for performing deep discovery to retrieve detailed information about physical network adapters.

· Support for VMM Console Add-Ins: You can now create Add-Ins to extend the VMM console. Add-Ins let you enable new actions or additional configuration for VMM objects by writing an application that uses the context passed in about the selected VMM objects. You can also embed custom WPF UI or web portals directly into the console’s main views to provide a more fully integrated experience.
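The placement rule described above (choosing the virtual hard disk format from the destination host’s OS) might look roughly like the sketch below. It is hypothetical, not VMM’s actual placement logic: the capability table and function name are assumptions, while the size limits are the documented VHD (2,040 GB) and VHDX (64 TB) maxima.

```python
# Hypothetical capability table: virtual disk formats supported by each host OS.
SUPPORTED_FORMATS = {
    "Windows Server 2008 R2": {".vhd"},
    "Windows Server 2012": {".vhd", ".vhdx"},
}
# Maximum virtual disk size per format, in GB (VHD: 2,040 GB; VHDX: 64 TB).
MAX_SIZE_GB = {".vhd": 2040, ".vhdx": 64 * 1024}

def choose_disk_format(host_os: str, size_gb: int) -> str:
    """Prefer .vhdx when the destination host supports it, else fall back to .vhd."""
    formats = SUPPORTED_FORMATS[host_os]
    fmt = ".vhdx" if ".vhdx" in formats else ".vhd"
    if size_gb > MAX_SIZE_GB[fmt]:
        raise ValueError(f"{size_gb} GB exceeds the {fmt} limit on {host_os}")
    return fmt

print(choose_disk_format("Windows Server 2012", 100))     # .vhdx
print(choose_disk_format("Windows Server 2008 R2", 100))  # .vhd
```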

In App Controller, you can deploy and manage, from a single console, applications running in Windows Azure PaaS and IaaS, on-premises VMM clouds, and clouds provided by hosting providers. In addition, there are the following new features:

· Windows Azure Virtual Machine support: In addition to supporting Windows Azure PaaS workloads, App Controller in CTP2 adds support for the newly announced IaaS virtual machines. You can easily deploy, manage, and operate Windows Azure Virtual Machines.

· Copy VMs from private cloud to public cloud: In CTP2, you can select a VM in a VMM private cloud and copy it to Windows Azure. App Controller turns uploading the disk, converting the settings, and deploying a new virtual machine into one easy migration flow.

· Hosting Provider support: In addition to on-premises VMM private clouds and the Windows Azure public cloud, App Controller supports connecting to VMM clouds at a hosting provider. You can connect to a hosting provider and manage user access to these clouds. You can also deploy, manage, and operate virtual machines at the hosting provider.

Also, check out the project code-named “Service Provider Foundation” for System Center 2012 Service Pack 1 (SP1), which provides a rich set of web services that manage Virtual Machine Manager (VMM) for System Center 2012 SP1. The VMM infrastructure is exposed through “Service Provider Foundation” as a web service, so you can manage this System Center component through web services; for example, you can create or delete virtual machines.

“Service Provider Foundation” provides a web service that acts as an intermediary between web-based administration client and server applications. The web service supports REST-based requests using the OData protocol. The web service handles these requests through Windows PowerShell scripts to communicate and manage server applications.
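As a rough illustration of an OData request against such a web service, the sketch below only builds the query URL, using the standard OData system query options `$filter`, `$select` and `$top`; it sends nothing. The host name, the service path, and the `VirtualMachines` entity set with its `Name`/`Status` properties are assumptions for illustration; check the Service Provider Foundation documentation for the real endpoint and entity model.

```python
from urllib.parse import quote

def build_odata_url(service_root, entity_set, filter_expr=None, select=None, top=None):
    """Build an OData query URL from standard system query options; no request is sent."""
    options = []
    if filter_expr:
        options.append("$filter=" + quote(filter_expr, safe="'"))
    if select:
        options.append("$select=" + quote(",".join(select), safe=","))
    if top is not None:
        options.append(f"$top={int(top)}")
    url = service_root.rstrip("/") + "/" + entity_set
    return url + ("?" + "&".join(options) if options else "")

# Hypothetical SPF service root and entity set
root = "https://spf.example.com:8090/SC2012/VMM/Microsoft.Management.Odata.svc"
print(build_odata_url(root, "VirtualMachines",
                      filter_expr="Name eq 'web01'", select=["Name", "Status"], top=5))
```

A client would then issue an HTTP GET for that URL with suitable credentials; on the server side, the request is handled through Windows PowerShell as described above.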

VMM 2012: SMI-S Providers: Supported Storage Arrays

The following storage arrays support the new storage automation features in VMM 2012.

List updated as of May 2, 2012.

Note: For the SMI-S provider type, a value of “proxy” indicates that you must install the SMI-S provider on a server that the VMM management server can access over the network by IP address or by FQDN. Do not install the SMI-S provider on the VMM management server itself.

| Manufacturer/Series | Model | Download Link | Protocol | Minimum Controller Firmware | SMI-S Provider Type | Provider Version |
|---|---|---|---|---|---|---|
| Dell/Compellent | Storage Center | Dell Compellent Solutions | iSCSI/FC | SC 5.5.4 and later, EM 5.5.5 and later | Proxy* | 1 |
| EMC/Symmetrix | VMAX/VMAXe | EMC PowerLink | FC | Enginuity 5875 or later | Proxy* | 4.3 |
| EMC/VNX | VNX | EMC PowerLink | iSCSI | Flare 31 or later | Proxy* | 4.3 |
| EMC/CX4 | All | EMC PowerLink | iSCSI/FC | Flare 30 | Proxy* | 4.3 |
| HP/P10000 | V800/V400 | Embedded | iSCSI/FC | HP 3PAR InForm OS 3.1.1.P10 | Embedded | 1.4 |
| HP/P2000 G3 | | Embedded | iSCSI/FC | TS240 | Embedded | 1.5 |
| HP/P6000 | P6300/P6500 | HP | iSCSI/FC | 1000 0000 | Proxy* | 1.4 |
| IBM/DS8000 | 941/951/961 | Embedded | FC | | Embedded | 5.6.x, 5.7.x |
| IBM/XIV | 2810/2812 (Gen 2) | Embedded | iSCSI/FC | | Embedded | 10.2.0, 10.2.1, 10.2.2, 10.2.4.x |
| IBM/XIV | 2810/2812 (Gen 3) | Embedded | iSCSI/FC | | Embedded | 11.0, 11.1 |
| NetApp/FAS | All | NetApp NOW | iSCSI/FC | 7.2.5 (7-mode) | Proxy* | 4.0.1 |

20th Australian System Administrators’ Conference

The 20th Australian System Administrators’ Conference (a milestone event for Systems Administration in Australia) will be held 2–5 October 2012 at the Hilton Cairns Hotel.

This is the premier Systems Administration event in the Asia-Pacific region.

The Technical Program runs 2–3 October 2012, followed by two days of Tutorials on 4 and 5 October 2012.

I will be presenting the session: Private Cloud with Windows 2012 Hyper-V and System Center VMM 2012

Registrations are now open: http://conference.sage-au.org.au/files/registration-form_31052012

More info: http://conference.sage-au.org.au/

See you there!!

Windows 2012 Release Candidate: Available

Windows Server Home Page:

http://www.microsoft.com/en-us/server-cloud/

Windows Server 2012 Release Candidate: http://technet.microsoft.com/en-us/evalcenter/hh670538.aspx

Windows Server 2012 Remote Server Administration Tools (RSAT) for Windows 8 Client:

http://www.microsoft.com/en-us/download/details.aspx?id=28972

Video Demos of Network Virtualization, Storage Spaces, Live Migration, Server Management

High Availability: Why Hyper-V delivers better

This is an interesting subject to talk about.

Take as an example a three-node cluster in which every node has a single connection to shared storage.

With VMware, if one of the hosts ever loses IP storage connectivity (a fault on the iSCSI NIC, for example), the virtual machines on that host are left in the dark and will probably crash with a blue screen. VMware’s monitoring will then try to restart the virtual machine on the same host or on any other host that still has storage connectivity.

Now, the clever solution from Hyper-V:

If one of the Hyper-V hosts loses IP storage connectivity (a fault on the iSCSI NIC, for example), Hyper-V redirects that host’s storage traffic for the CSV over the network, and the VM keeps working: no blue screen, no restart.

In a Hyper-V Failover Cluster with Cluster Shared Volumes (CSV), storage I/O is normally Direct I/O, meaning each node hosting virtual machines writes directly (via Fibre Channel, iSCSI, or SAS connectivity) to the CSV volume. But if a host loses storage connectivity, its traffic is redirected over the CSV network to another node in the cluster that still has direct access to the storage supporting the CSV volume. Instead of losing all connectivity to storage, the node sends all of its storage-related I/O over the CSV network. This is a very powerful technology because it prevents a total loss of connectivity, allowing virtual machine workloads to continue functioning.
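The routing decision described above can be sketched as follows: use direct I/O when the local node can reach the storage, otherwise redirect to a peer that can. This is a conceptual model with hypothetical names; how the real CSV stack picks the target node (for example, the coordinator node) is not modeled here, and this sketch simply takes the first healthy peer.

```python
from dataclasses import dataclass

@dataclass
class Node:
    name: str
    has_storage_path: bool = True
    online: bool = True

def route_csv_io(local: Node, cluster: list[Node]) -> tuple[str, str]:
    """Return ("direct", node) or ("redirected", node) for I/O issued on `local`."""
    if local.has_storage_path:
        return ("direct", local.name)  # write straight to the CSV over FC/iSCSI/SAS
    for peer in cluster:
        if peer is not local and peer.online and peer.has_storage_path:
            return ("redirected", peer.name)  # ship the I/O over the CSV network
    raise RuntimeError("no node in the cluster can reach the CSV volume")

nodes = [Node("node1"), Node("node2"), Node("node3")]
print(route_csv_io(nodes[0], nodes))  # ('direct', 'node1')
nodes[0].has_storage_path = False     # iSCSI NIC on node1 fails
print(route_csv_io(nodes[0], nodes))  # ('redirected', 'node2')
```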

Of course, there are other fault scenarios to consider, and yes, I know the recommendation is to have redundant storage connections. But that is a more expensive solution, and it does not change the fact that the Hyper-V approach is more clever.

If you have ever experienced this situation, I would like to hear your input.